Humanising robots

As construction begins on the new home of the Data Technology Institute, its Director, Professor Jon Oberlander, discusses artificial intelligence and the prospects for compassionate robots.

Artificial intelligence continues to make steady strides. We’ve seen IBM’s Deep Blue computer beat world chess champion Garry Kasparov; another IBM system – Watson – has won a TV quiz show; and Google DeepMind’s AlphaGo has recently come out on top in the game of Go. Self-driving cars are coming out of the labs and onto the streets.

My robotics colleagues in the Informatics Forum work with a menagerie of machines, including the dexterous Baxter robot, the mobile Youbot, and a collection of humanoids large and small, such as the Willow Garage PR2. The University has recently taken delivery of a NASA Valkyrie, one of the most advanced humanoid robots in the world, weighing in at 125kg and measuring 1.8 metres tall. The whole gang (researchers and robots) will move into the Data Technology Institute’s new home when it is complete in 2018 (see below).

In the current climate, robot carers are a staple in serious research grant proposals, as well as science fiction. They can take a variety of forms: advanced humanoid robots, cuddly robot pets, or all-seeing smart homes, studded with cameras and microphones. The idea is that they can assist people who have physical or cognitive problems, for instance helping an elderly person continue to live a relatively independent life in their own home. Such robots can perceive and respond to people’s emotions and moods, as well as their physical needs.

But can these robots actually be compassionate? This was one of the questions tackled at a workshop on the science of compassion that I recently took part in, jointly organised by the University of Edinburgh and Stanford University in California. Compassion is core to our shared humanity, and sees us respond to suffering by going out of our way to help our fellow humans.

My response is that – for now – robotic care is an oxymoron, a contradiction in terms. But there is a silver lining to that cloud: even current artificial intelligence can perhaps support human compassion in a valuable way.

Now with feeling

There’s a big problem with current robots. Their goal is to act compassionately: to perceive and respond suitably to emotional and physical needs. To do this, an artificial system does not need to actually have its own emotions.

The Paro robot seal is a smart cuddly toy, which can produce emotionally relevant responses, without having a complex emotional life of its own. Lots of other more complex robots are just like this: they do the right thing (usually), but they don’t have inner lives like ours.

And that’s the trouble. We rightly criticise humans – such as nurses, doctors and care workers – if they just act as if they were compassionate, without feeling anything. In fact, we say that they are just “robots” if they are going through the checklist without engaging. If “doing the right thing” isn’t enough for humans, how could it possibly be for artificial intelligences?

Jon Oberlander

Jon Oberlander has been Professor of Epistemics at the University of Edinburgh since 2005. He works on getting computers to talk (or write) like individual people, so his research involves studying how people express themselves – face to face or online – and building machines that can adapt themselves to people.

He collaborates with linguists, psychologists, computer scientists and social scientists, and has longstanding interests in the uses of technology in cultural heritage and the creative industries.

He is Director of the University’s new Data Technology Institute, Director of the Institute for Language, Cognition and Computation, and Co-Director of the Centre for Design Informatics.

Devices that deceive

As a side note, we can see a real hazard already looming for designers of intelligent systems: the designers know that the artificial agents don’t care. So is it ever ethical to let other people think that they do? In my own work, I have built and tested systems with some aspects of simulated human personality. If computers can respond to human events and messages with style and character, then they have a kind of social presence.

However, I have been convinced – by Dr Joanna Bryson (MSc Knowledge Based Systems 1992, MPhil 2001), an Edinburgh alumna now working at the University of Bath – that playing up social presence risks implying not just social agency but moral agency. And that is wrong, because current robots are just tools. So they are not responsible for their actions: their designers are. Arguably, we should not play up personality and social presence for now.

Could we make artificial intelligences that really did give and receive compassion? We would need at least two things, both a little tricky.

First, we need devices with the internal analogues of emotions. Beyond that, they may have to be able to reflect on what having the emotion means. At the workshop in Stanford, Edinburgh’s Principal, Professor Timothy O’Shea, who has longstanding interests in machine learning, coined the term “artificial compassion” to cover what this kind of system might have to achieve. It’s like human compassion in the same way that machine learning is similar to – but not identical with – human learning.

Social acceptance

Secondly, to perceive and attribute compassion, we depend on a fundamental recognition of the joint humanity of carer and caree. My colleague Henry Thompson points out that to develop moral agency, we require co-participation in a range of social contexts, presupposing that moral agency is at least possible in principle.

It’s true that we allow children into these contexts as they grow up, as a means of teaching them moral values. But we do that because we know it works: we were once just like them, and we managed to become moral agents. So robots would also have to be accepted into similar contexts, so that they can gain the right skills. And to be accepted, they would have to “look right”.

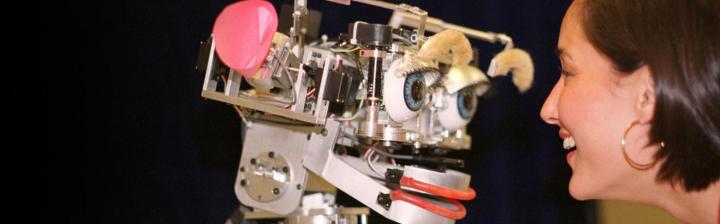

Looking right will help machines be accepted into normal human social contexts, so that they can then learn robust cognitive and moral skills. It’s not impossible to build robots that have artificial emotions and look human enough – Cynthia Breazeal’s Kismet robot at Massachusetts Institute of Technology was a great example.

But acceptance into the broader community may prove even harder than the technical challenges because, as recent events demonstrate, humans are still not great at extending a welcome to incomers who look a bit different. And that acceptance is critical.

So there is a long road from where we are now to robots that would be accepted as genuinely compassionate. But there is a consolation nearby: current-generation artificial intelligence could be ready to help teach and cultivate compassion.

Robot as teacher

We already see intelligent tutoring systems with minimal intelligence being used to train individuals with social skills deficits to deal better with other people. Such a system encodes best tutoring practice based on human experience, without having had any of those experiences itself.

On top of this, tutoring systems have been given the ability to recognise the emotions and moods of their tutees, to help guide their interventions. Putting these two elements together, even machines without true compassion could help teach people about compassion.

We can definitely develop intelligent tutoring systems that explore scenarios, advise, and make trainee human carers think harder, and feel harder. Such systems would be interactive compilations of human experience. And in that respect, they would follow in the footsteps of IBM’s Deep Blue and Watson, and Google DeepMind’s AlphaGo. Is the time ripe for Deep Compassion?

The Data Technology Institute

The University's Data Technology Institute (DTI) is a new hub to foster data-driven innovation. Its programme of activity will capitalise on existing strengths across the University, and through alliances with like-minded organisations will position Edinburgh as a global leader in data.

From 2018, the DTI will be housed in a new building that is the third and final phase of the Potterrow development, following completion of the Informatics Forum and the Dugald Stewart Building in 2008.

As well as housing Informatics’ robots, the new building will be home to a broad group of occupants from across the University, start-up and spin-out companies, and industrial collaborators.

Professor to judge Robot Wars

Professor Sethu Vijayakumar is to be a judge on Robot Wars when the cult TV show returns to the BBC later in 2016.

Professor Vijayakumar holds a Personal Chair in Robotics in the School of Informatics and is Director of the Edinburgh Centre for Robotics, a collaboration between the University of Edinburgh and Heriot-Watt University. He was the 2015 winner of the Tam Dalyell Prize for Excellence in Engaging the Public with Science.

Robot Wars first ran on the BBC between 1998 and 2001, and had a loyal and enthusiastic following. At its peak the programme had 6 million viewers, and the format became a worldwide success, showing in 45 countries.

In the programme, amateur and professional robot builders pitch their machines against each other, aiming to destroy or disable their opponents, or eject them from the arena, while surviving attacks from “house robots” as well as their competitors.

Professor Vijayakumar says: “We have just completed filming in the purpose-built, bullet-proof arena and it is going to be a spectacle!”

Having recently also been involved in launching the BBC micro:bit initiative to encourage school children to begin programming, he adds: “It is particularly inspiring to see so many young kids and girls engage with the engineering and science behind it; there is no better way to draw them in and make them curious, so I am very proud to be part of Robot Wars along with the BBC micro:bit launch just last month.”

Robot Wars, to be shown on BBC 2 and hosted by Dara Ó Briain and Angela Scanlon, will include new versions of the house robots, with a raft of technological advances since the previous series.
